llama-cpp-python : インストール (GPU)2024/02/21 |

|

Meta の Llama (Large Language Model Meta AI) モデルのインターフェースである [llama.cpp] の Python バインディング [llama-cpp-python] をインストールします。 |

|

| [1] | |

| [2] | |

| [3] |

cuDNN (CUDA Deep Neural Network library) をダウンロードしてインストールします。 ⇒ https://docs.nvidia.com/deeplearning/cudnn/reference/support-matrix.html |

Windows PowerShell Copyright (C) Microsoft Corporation. All rights reserved. PS C:\Users\Administrator> Invoke-WebRequest -Uri https://developer.download.nvidia.com/compute/cudnn/9.0.0/local_installers/cudnn_9.0.0_windows.exe -OutFile "cudnn_9.0.0_windows.exe" # サイレントモードでインストール PS C:\Users\Administrator> ./cudnn_9.0.0_windows.exe -s # インストールプロセスが起動 PS C:\Users\Administrator> Get-Process -Name "cud*", "setup*" Handles NPM(K) PM(K) WS(K) CPU(s) Id SI ProcessName ------- ------ ----- ----- ------ -- -- ----------- 329 19 3144 15580 67.41 1252 0 cudnn_9.0.0_windows 425 22 10604 24400 2.31 2136 0 setup # 上記プロセスが終了すればインストール完了 PS C:\Users\Administrator> Get-Process -Name "cud*", "setup*" # 追加で JSON データを見やすくできる jq.exe もダウンロード PS C:\Users\Administrator> Invoke-WebRequest -Uri https://github.com/jqlang/jq/releases/download/jq-1.7.1/jq-windows-amd64.exe -OutFile "C:\WINDOWS\system32\jq.exe" |

| [4] | [llama-cpp-python] をインストールします。 |

# エクステンション コピー PS C:\Users\Administrator> Copy-Item "C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.3\extras\visual_studio_integration\MSBuildExtensions\*" "C:\Program Files (x86)\Microsoft Visual Studio\2022\BuildTools\MSBuild\Microsoft\VC\v170\BuildCustomizations\" PS C:\Users\Administrator> $env:LLAMA_CUBLAS=1 PS C:\Users\Administrator> $env:FORCE_CMAKE=1 PS C:\Users\Administrator> $env:CMAKE_ARGS="-DLLAMA_CUBLAS=on" PS C:\Users\Administrator> pip3 install llama-cpp-python[server] Collecting llama-cpp-python[server] Using cached llama_cpp_python-0.2.44.tar.gz (36.6 MB) Installing build dependencies ... done Getting requirements to build wheel ... done Installing backend dependencies ... done Preparing wheel metadata ... done ..... ..... Successfully installed MarkupSafe-2.1.5 annotated-types-0.6.0 anyio-4.3.0 click-8.1.7 colorama-0.4.6 diskcache-5.6.3 exceptiongroup-1.2.0 fastapi-0.109.2 h11-0.14.0 jinja2-3.1.3 llama-cpp-python-0.2.44 numpy-1.26.4 pydantic-2.6.1 pydantic-core-2.16.2 pydantic-settings-2.2.1 python-dotenv-1.0.1 sniffio-1.3.0 sse-starlette-2.0.0 starlette-0.36.3 starlette-context-0.3.6 typing-extensions-4.9.0 uvicorn-0.27.1 |

| [5] |

[llama.cpp] で使用可能な GGUF 形式のモデルをダウンロードして、[llama-cpp-python] を起動します。 ⇒ https://huggingface.co/TheBloke/Llama-2-13B-chat-GGUF/tree/main ⇒ https://huggingface.co/TheBloke/Llama-2-70B-Chat-GGUF/tree/main |

PS C:\Users\Administrator> Invoke-WebRequest -Uri https://huggingface.co/TheBloke/Llama-2-13B-chat-GGUF/resolve/main/llama-2-13b-chat.Q4_K_M.gguf -OutFile "llama-2-13b-chat.Q4_K_M.gguf" # ファイアウォールルール追加 PS C:\Users\Administrator> New-NetFirewallRule ` -Name "Llama-cpp Server Port" ` -DisplayName "Llama-cpp Server Port" ` -Description 'Allow Llama-cpp Server Port' ` -Profile Any ` -Direction Inbound ` -Action Allow ` -Protocol TCP ` -Program Any ` -LocalAddress Any ` -LocalPort 8000 # [--n_gpu_layers] : GPU に配置するレイヤーの数 # -- よくわからない場合は [-1] を指定 PS C:\Users\Administrator> python -m llama_cpp.server --model ./llama-2-13b-chat.Q4_K_M.gguf --n_gpu_layers -1 --host 0.0.0.0 --port 8000 ggml_init_cublas: GGML_CUDA_FORCE_MMQ: no ggml_init_cublas: CUDA_USE_TENSOR_CORES: yes ggml_init_cublas: found 1 CUDA devices: Device 0: NVIDIA GeForce RTX 3060, compute capability 8.6, VMM: yes llama_model_loader: loaded meta data with 19 key-value pairs and 363 tensors from ./llama-2-13b-chat.Q4_K_M.gguf (version GGUF V2) llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output. llama_model_loader: - kv 0: general.architecture str = llama llama_model_loader: - kv 1: general.name str = LLaMA v2 llama_model_loader: - kv 2: llama.context_length u32 = 4096 llama_model_loader: - kv 3: llama.embedding_length u32 = 5120 llama_model_loader: - kv 4: llama.block_count u32 = 40 llama_model_loader: - kv 5: llama.feed_forward_length u32 = 13824 llama_model_loader: - kv 6: llama.rope.dimension_count u32 = 128 llama_model_loader: - kv 7: llama.attention.head_count u32 = 40 llama_model_loader: - kv 8: llama.attention.head_count_kv u32 = 40 llama_model_loader: - kv 9: llama.attention.layer_norm_rms_epsilon f32 = 0.000010 llama_model_loader: - kv 10: general.file_type u32 = 15 llama_model_loader: - kv 11: tokenizer.ggml.model str = llama llama_model_loader: - kv 12: tokenizer.ggml.tokens arr[str,32000] = ["<unk>", "<s>", "</s>", "<0x00>", "<... llama_model_loader: - kv 13: tokenizer.ggml.scores arr[f32,32000] = [0.000000, 0.000000, 0.000000, 0.0000... llama_model_loader: - kv 14: tokenizer.ggml.token_type arr[i32,32000] = [2, 3, 3, 6, 6, 6, 6, 6, 6, 6, 6, 6, ... llama_model_loader: - kv 15: tokenizer.ggml.bos_token_id u32 = 1 llama_model_loader: - kv 16: tokenizer.ggml.eos_token_id u32 = 2 llama_model_loader: - kv 17: tokenizer.ggml.unknown_token_id u32 = 0 llama_model_loader: - kv 18: general.quantization_version u32 = 2 llama_model_loader: - type f32: 81 tensors llama_model_loader: - type q4_K: 241 tensors llama_model_loader: - type q6_K: 41 tensors llm_load_vocab: special tokens definition check successful ( 259/32000 ). llm_load_print_meta: format = GGUF V2 llm_load_print_meta: arch = llama llm_load_print_meta: vocab type = SPM llm_load_print_meta: n_vocab = 32000 llm_load_print_meta: n_merges = 0 llm_load_print_meta: n_ctx_train = 4096 llm_load_print_meta: n_embd = 5120 llm_load_print_meta: n_head = 40 llm_load_print_meta: n_head_kv = 40 llm_load_print_meta: n_layer = 40 llm_load_print_meta: n_rot = 128 llm_load_print_meta: n_embd_head_k = 128 llm_load_print_meta: n_embd_head_v = 128 llm_load_print_meta: n_gqa = 1 llm_load_print_meta: n_embd_k_gqa = 5120 llm_load_print_meta: n_embd_v_gqa = 5120 llm_load_print_meta: f_norm_eps = 0.0e+00 llm_load_print_meta: f_norm_rms_eps = 1.0e-05 llm_load_print_meta: f_clamp_kqv = 0.0e+00 llm_load_print_meta: f_max_alibi_bias = 0.0e+00 llm_load_print_meta: n_ff = 13824 llm_load_print_meta: n_expert = 0 llm_load_print_meta: n_expert_used = 0 llm_load_print_meta: rope scaling = linear llm_load_print_meta: freq_base_train = 10000.0 llm_load_print_meta: freq_scale_train = 1 llm_load_print_meta: n_yarn_orig_ctx = 4096 llm_load_print_meta: rope_finetuned = unknown llm_load_print_meta: model type = 13B llm_load_print_meta: model ftype = Q4_K - Medium llm_load_print_meta: model params = 13.02 B llm_load_print_meta: model size = 7.33 GiB (4.83 BPW) llm_load_print_meta: general.name = LLaMA v2 llm_load_print_meta: BOS token = 1 '<s>' llm_load_print_meta: EOS token = 2 '</s>' llm_load_print_meta: UNK token = 0 '<unk>' llm_load_print_meta: LF token = 13 '<0x0A>' llm_load_tensors: ggml ctx size = 0.28 MiB llm_load_tensors: offloading 40 repeating layers to GPU llm_load_tensors: offloading non-repeating layers to GPU llm_load_tensors: offloaded 41/41 layers to GPU llm_load_tensors: CPU buffer size = 87.89 MiB llm_load_tensors: CUDA0 buffer size = 7412.96 MiB .................................................................................................... llama_new_context_with_model: n_ctx = 2048 llama_new_context_with_model: freq_base = 10000.0 llama_new_context_with_model: freq_scale = 1 llama_kv_cache_init: CUDA0 KV buffer size = 1600.00 MiB llama_new_context_with_model: KV self size = 1600.00 MiB, K (f16): 800.00 MiB, V (f16): 800.00 MiB llama_new_context_with_model: CUDA_Host input buffer size = 15.01 MiB llama_new_context_with_model: CUDA0 compute buffer size = 204.00 MiB llama_new_context_with_model: CUDA_Host compute buffer size = 10.00 MiB llama_new_context_with_model: graph splits (measure): 3 AVX = 1 | AVX_VNNI = 0 | AVX2 = 1 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 1 | SSSE3 = 0 | VSX = 0 | MATMUL_INT8 = 0 | Model metadata: {'general.name': 'LLaMA v2', 'general.architecture': 'llama', 'llama.context_length': '4096', 'llama.rope.dimension_count': '128', 'llama.embedding_length': '5120', 'llama.block_count': '40', 'llama.feed_forward_length': '13824', 'llama.attention.head_count': '40', 'tokenizer.ggml.eos_token_id': '2', 'general.file_type': '15', 'llama.attention.head_count_kv': '40', 'llama.attention.layer_norm_rms_epsilon': '0.000010', 'tokenizer.ggml.model': 'llama', 'general.quantization_version': '2', 'tokenizer.ggml.bos_token_id': '1', 'tokenizer.ggml.unknown_token_id': '0'} INFO: Started server process [3912] INFO: Waiting for application startup. INFO: Application startup complete. INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit) |

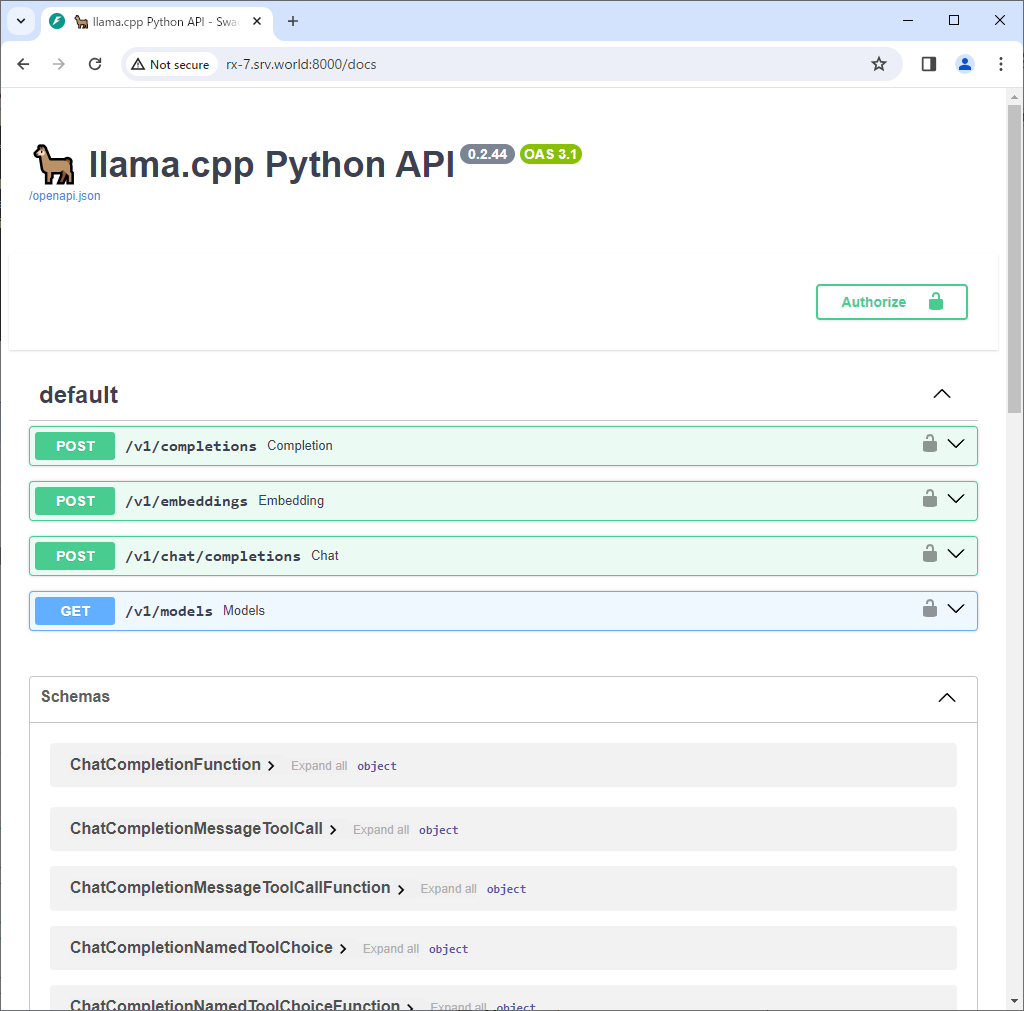

| [6] | ローカルネットワーク内の任意のコンピューターから [http://(サーバーのホスト名 または IP アドレス):8000/docs] にアクセスすると、ドキュメントを参照することができます。 |

|

| [7] | 簡単な質問を投入して動作確認します。 質問の内容や使用しているモデルによって、応答時間や応答内容は変わります。 ちなみに、当例では、8 vCPU + 16G メモリ + GeForce RTX 3060 (12G) のマシンで実行しています。 |

# 円周率 20桁まで教えて PS C:\Users\Administrator> curl.exe -s -XPOST -H 'Content-Type: application/json' localhost:8000/v1/chat/completions ` -d '{\"messages\": [{\"role\": \"user\", \"content\": \"Tell me pi to 20 digits.\"}]}' | jq.exe { "id": "chatcmpl-eca1093a-9acf-47b6-9314-3b596d583dae", "object": "chat.completion", "created": 1708511131, "model": "./llama-2-13b-chat.Q4_K_M.gguf", "choices": [ { "index": 0, "message": { "content": " Sure! Pi (??) is an irrational number, which means it cannot be expressed as a finite decimal or fraction. It is approximately equal to 3.14159, but it is a transcendental number, which means that it is not a root of any polynomial equation with rational coefficients, and its decimal representation goes on indefinitely in a seemingly random pattern. Here are the first 20 digits of pi:\n\n3.141592653589793\n\nPlease note that the number of digits I provided is limited by the size of the integer type used in your computer's architecture. In other words, the number of digits that can be stored in a given amount of memory is limited by the word size of the computer's processor. For example, on a 32-bit computer, the maximum value that can be stored in an integer type is 2^31-1 = 2147483647. Therefore, the number of digits I provided may not be the exact value of pi to that many decimal places. However, the value of pi is an irrational number and its decimal representation goes on indefinitely, so there is no practical limit to the number of digits that can be stored or computed.", "role": "assistant" }, "finish_reason": "stop" } ], "usage": { "prompt_tokens": 21, "completion_tokens": 278, "total_tokens": 299 } } # 月まで行くのにどれくらい時間がかかる? PS C:\Users\Administrator> curl.exe -s -XPOST -H 'Content-Type: application/json' localhost:8000/v1/chat/completions ` -d '{\"messages\": [{\"role\": \"user\", \"content\": \"How long does it take to go to the moon?\"}]}' | jq.exe { "id": "chatcmpl-f195fe2e-1144-48df-8ee6-b44fd1508d39", "object": "chat.completion", "created": 1708511375, "model": "./llama-2-13b-chat.Q4_K_M.gguf", "choices": [ { "index": 0, "message": { "content": " It's not possible for humans to travel to the moon, as the moon is a celestial body that is too far away from Earth to be reached by any mode of transportation. The moon is approximately 239,000 miles (384,000 kilometers) away from Earth, and the fastest spacecraft ever built, NASA's Parker Solar Probe, has a top speed of just over 150,000 miles per hour (240,000 kilometers per hour).\n\nAt this speed, it would take the Parker Solar Probe over 18 years to reach the moon. However, the spacecraft is not designed to carry human passengers, and even if it were possible to build a spacecraft capable of reaching the moon in a reasonable amount of time, the journey would be extremely dangerous and would require a tremendous amount of resources and technological advancements.\n\nTherefore, it is currently not possible for humans to travel to the moon, and any claims or promises of such travel should be viewed with skepticism.", "role": "assistant" }, "finish_reason": "stop" } ], "usage": { "prompt_tokens": 22, "completion_tokens": 235, "total_tokens": 257 } } |

関連コンテンツ