Open WebUI : Install

2024/06/04

Install Open WebUI, which provides a web interface for working with LLMs. Open WebUI can be installed with pip3, but as of June 2024 it requires Python >=3.11,<3.12.0a1. The default Python on Ubuntu 24.04 is 3.12, and it and the related modules are not fully compatible with that requirement, so this example runs Open WebUI in a container instead.
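To see why pip3 is not used here, the host interpreter can be compared against that range. Below is a minimal bash sketch, assuming the range reduces to final 3.11.x releases (pre-releases such as 3.11.0a1 are excluded); the function name `python_version_ok` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: does a Python version string fall inside ">=3.11,<3.12.0a1"?
# Assumption: that range admits only final 3.11.x releases, so the check
# is "major is 3, minor is 11, and no pre-release suffix such as a1/rc1".
python_version_ok() {
  local major minor rest
  # Split e.g. "3.11.9" into major=3, minor=11, rest=9
  IFS=. read -r major minor rest <<< "$1"
  [[ "$major" == "3" && "$minor" == "11" && "$rest" =~ ^[0-9]*$ ]]
}

# Example: test the interpreter that python3 resolves to on this host (if any)
if command -v python3 >/dev/null 2>&1; then
  host_version="$(python3 -c 'import platform; print(platform.python_version())')"
  if python_version_ok "$host_version"; then
    echo "Python $host_version satisfies >=3.11,<3.12.0a1"
  else
    echo "Python $host_version is outside >=3.11,<3.12.0a1; run Open WebUI in a container"
  fi
fi
```

On a stock Ubuntu 24.04 host, `python3` reports a 3.12.x version, so the check falls through to the container branch.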

| [1] | |

| [2] | |

| [3] | Pull and start the Open WebUI container image. |

root@dlp:~# podman pull ghcr.io/open-webui/open-webui:main
root@dlp:~# podman images
REPOSITORY                     TAG   IMAGE ID      CREATED      SIZE
ghcr.io/open-webui/open-webui  main  33cf9650630d  4 hours ago  3.58 GB

root@dlp:~# podman run -d -p 3000:8080 --security-opt apparmor=unconfined --add-host=host.containers.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

root@dlp:~# podman ps
CONTAINER ID  IMAGE                               COMMAND        CREATED         STATUS         PORTS                   NAMES
118ba00d45a8  ghcr.io/open-webui/open-webui:main  bash start.sh  19 minutes ago  Up 16 minutes  0.0.0.0:3000->8080/tcp  open-webui
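After `podman run` returns, the application inside the container may need some time before it answers. A minimal sketch for waiting on the published port, assuming bash (for its `/dev/tcp` redirection) and coreutils `timeout`; the function `wait_for_port` is hypothetical. It only confirms that the port accepts TCP connections, not that the web UI has finished initializing.

```shell
#!/usr/bin/env bash
# Sketch: wait until HOST:PORT accepts TCP connections, or give up.
# Relies on bash's /dev/tcp redirection and coreutils `timeout`.
wait_for_port() {
  local host="$1" port="$2" max_tries="${3:-120}" try
  for (( try = 1; try <= max_tries; try++ )); do
    # Each attempt is capped at 2 s so an unreachable host cannot stall the loop
    if timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Only act when executed directly with arguments, e.g. "localhost 3000 180"
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -ge 2 ]]; then
  if wait_for_port "$@"; then
    echo "Port $2 on $1 is accepting connections"
  else
    echo "Port $2 on $1 did not open; check 'podman logs open-webui'" >&2
    exit 1
  fi
fi
```

Port 3000 is the host side of `-p 3000:8080`, so on the server the script would be run with `localhost 3000` plus an optional number of attempts. If it gives up, `podman logs open-webui` shows the container's output.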

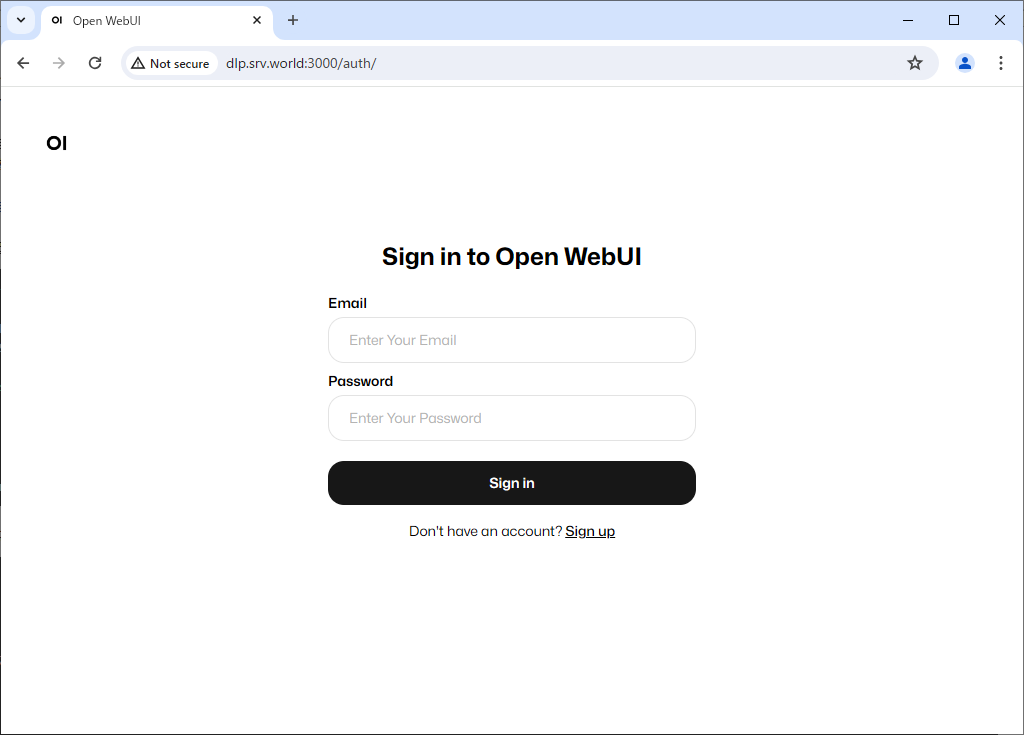

| [4] | Start a web browser on a client computer and access Open WebUI on port 3000 of the server (the host port published by -p 3000:8080), e.g. http://(server's hostname or IP address):3000/. The following screen appears. On first access, click [Sign up] to register as a user. |

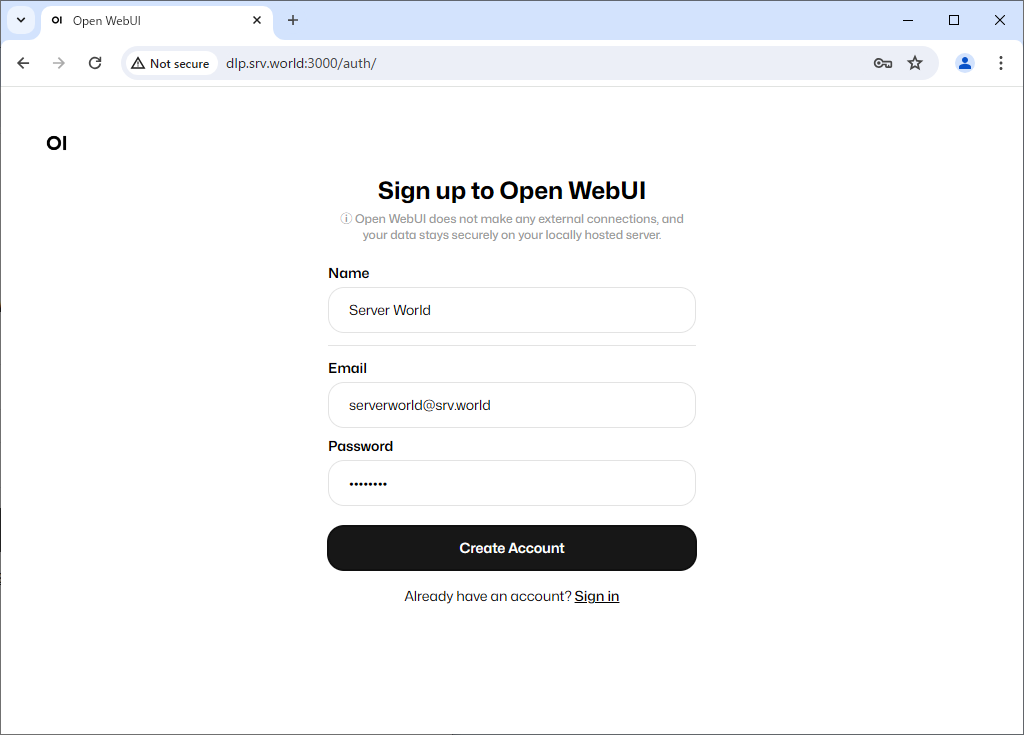

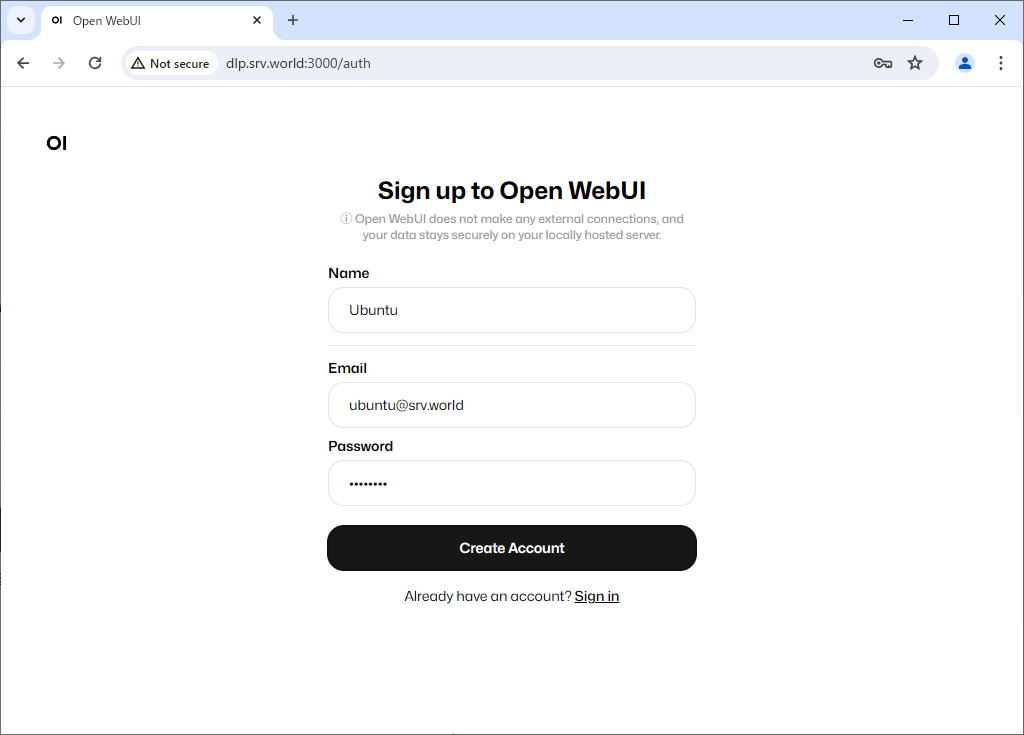

| [5] | Enter the required information and click [Create Account]. The first user to register automatically becomes an administrator. |

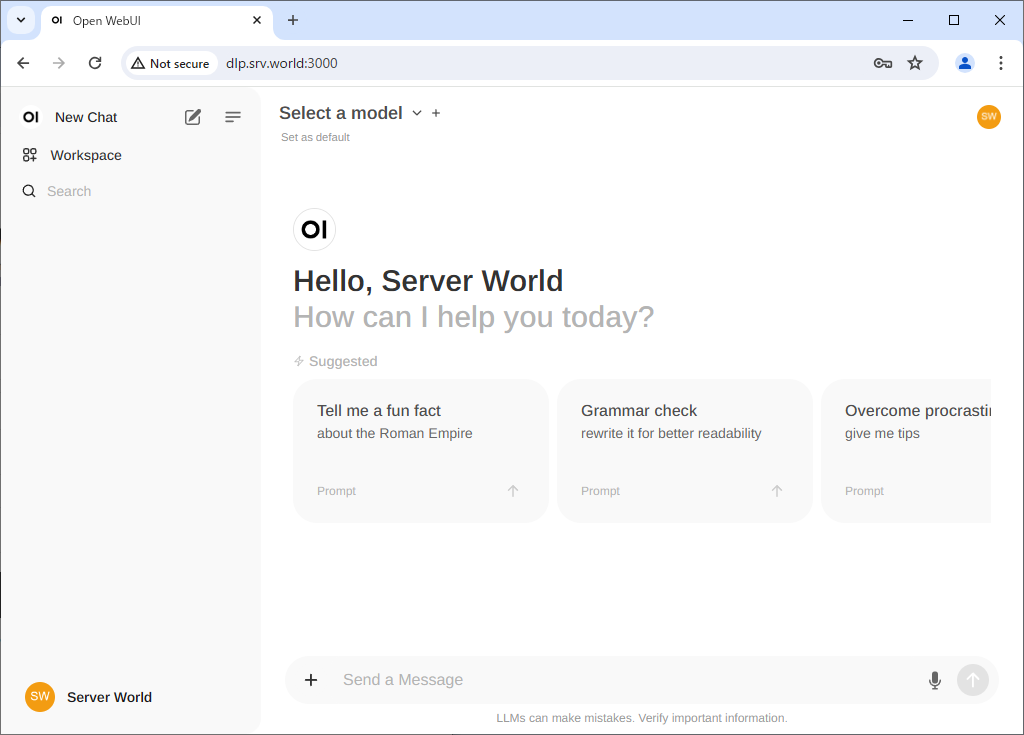

| [6] | Once your account is created, the Open WebUI default page will be displayed. |

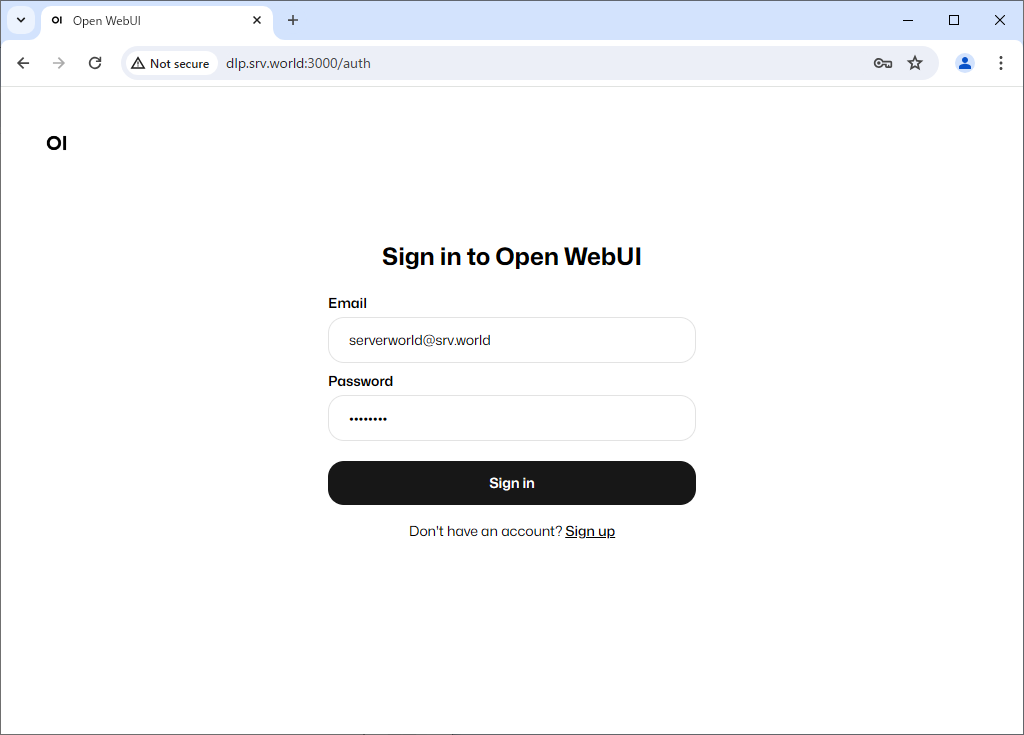

| [7] | On later visits, log in with your registered email address and password. |

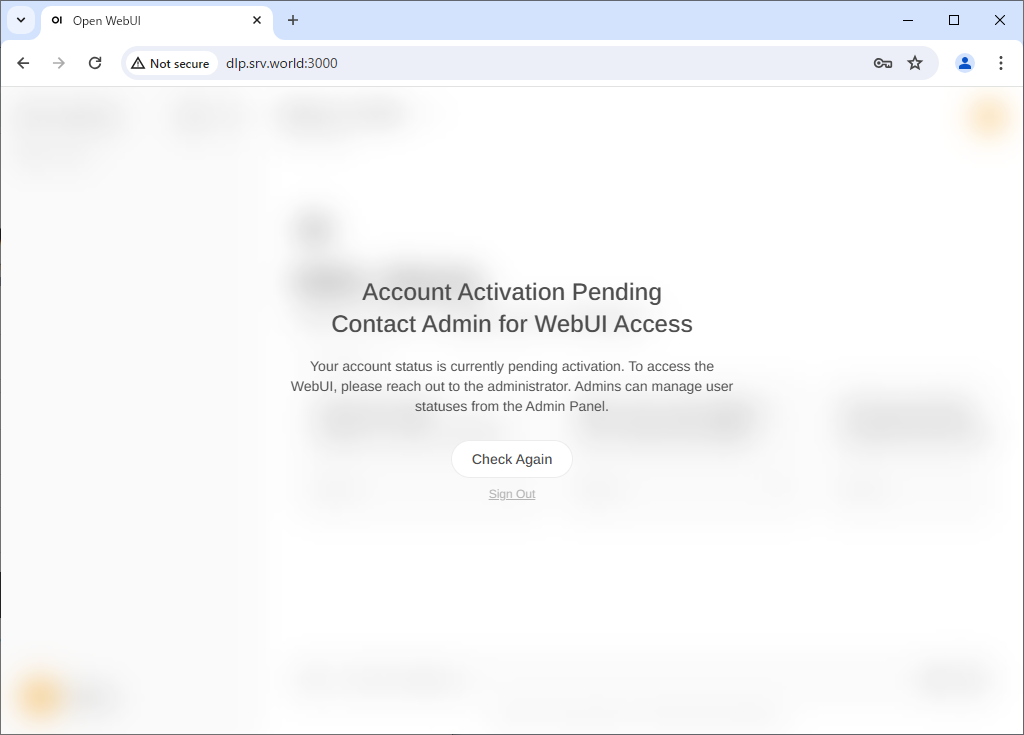

| [8] | When the second and subsequent users register by clicking [Sign up], their accounts are placed in [Pending] status and must be approved by an administrator. |

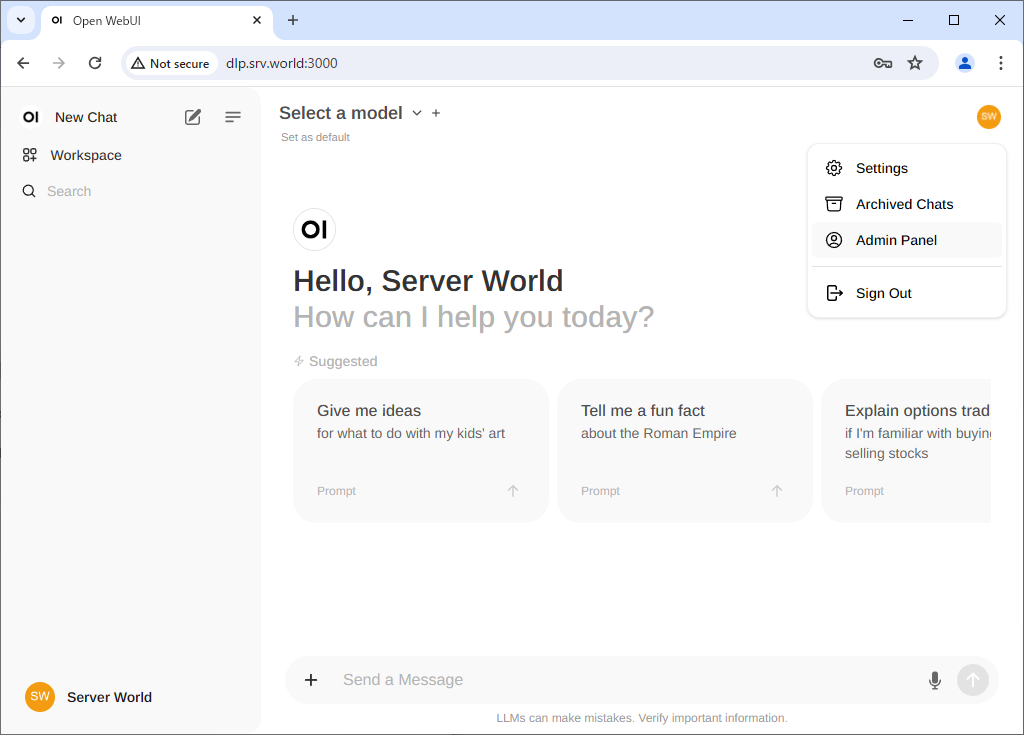

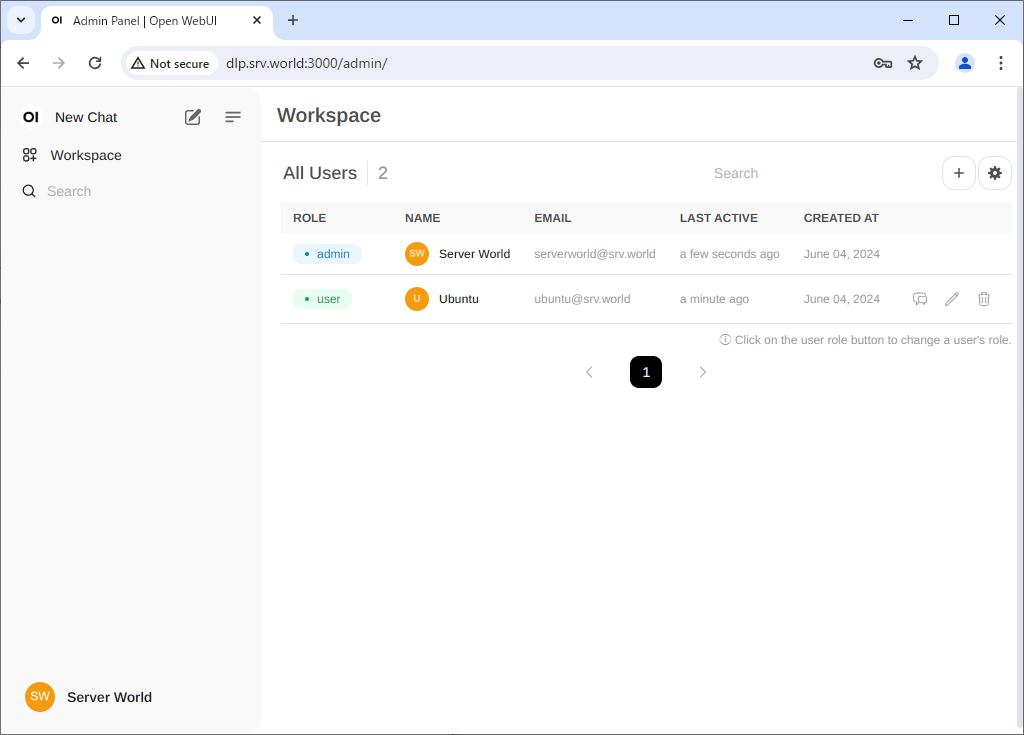

| [9] | To approve a new user, log in with the administrator account and click [Admin Panel] in the menu at the top right. |

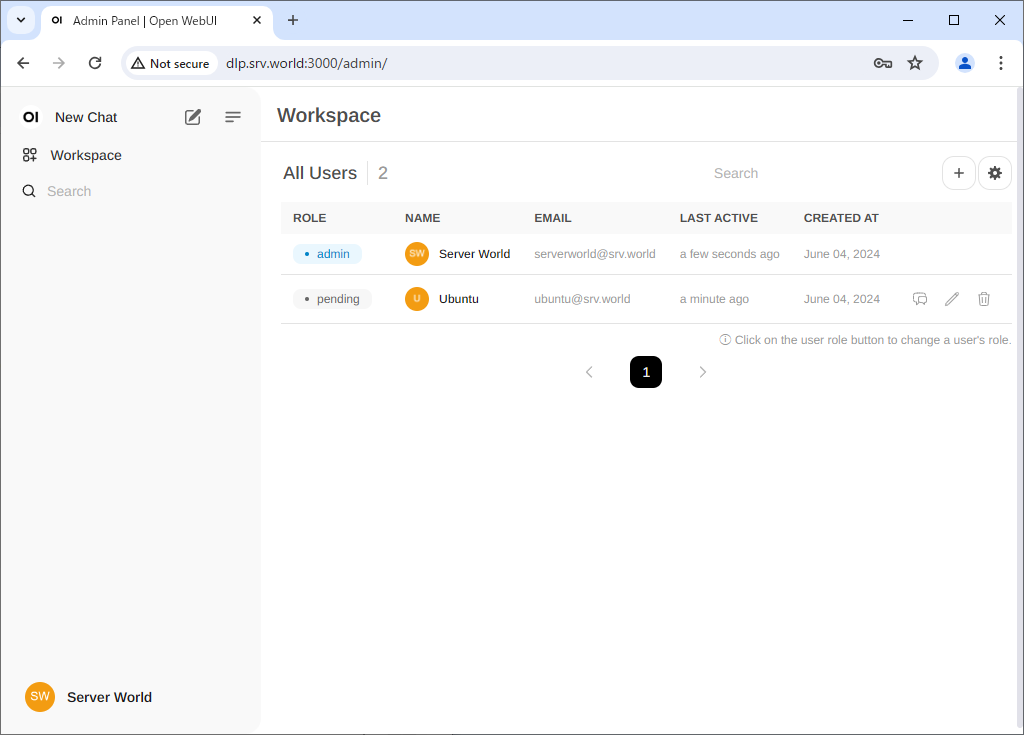

| [10] | Clicking [pending] on a user in [Pending] status approves that user. Clicking that user's [user] role again promotes the user to [Admin]. |

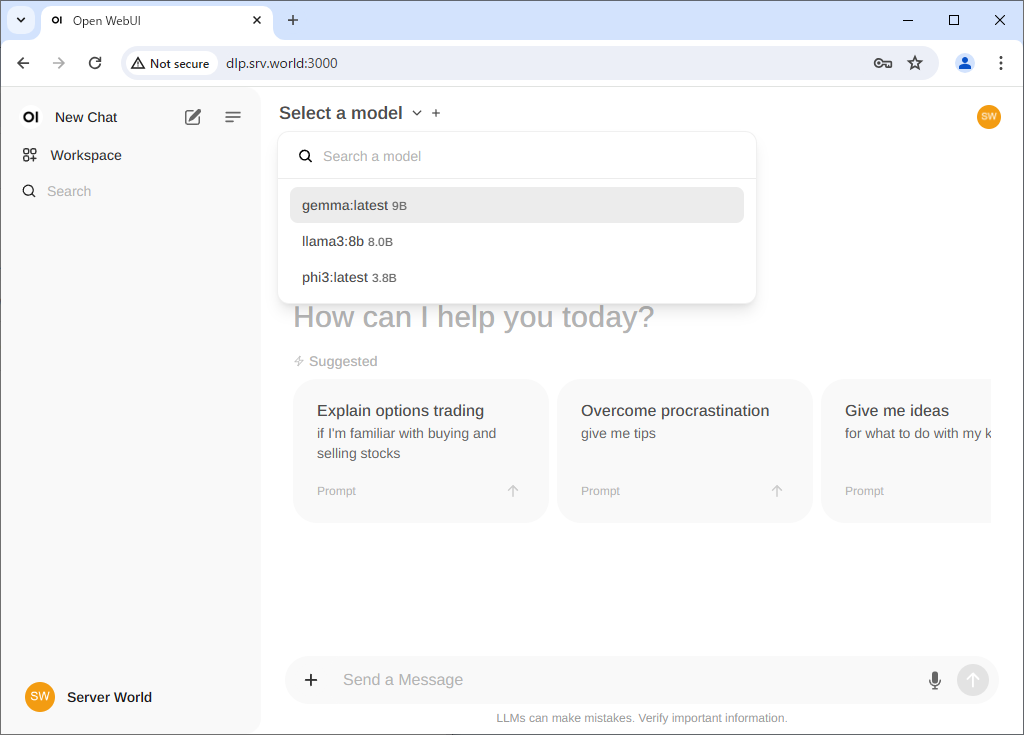

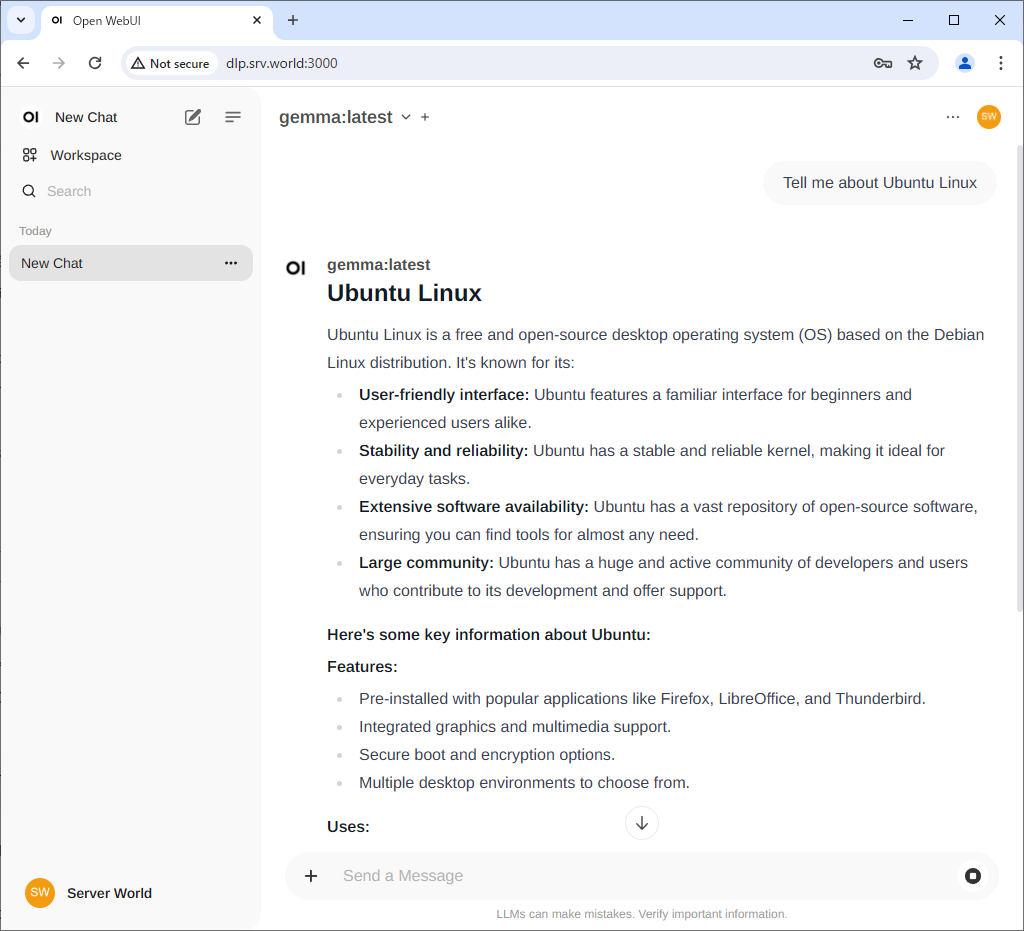

| [11] | To chat, select a model you have pulled into Ollama from the menu at the top, then enter a message in the box at the bottom to receive a reply. |
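The model menu can only offer what Ollama already has. A small sketch for checking this from the shell, assuming the default `ollama list` table layout (a header row, then one model per line with its name in the first column); `model_names` and `has_model` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: extract model names from `ollama list` output and test for one.
# Assumption: the default table layout, i.e. a header row followed by one
# model per line with its NAME (e.g. "llama3:latest") in the first column.
model_names() {
  awk 'NR > 1 && NF { print $1 }'
}

# Succeeds if the exact model name in $1 appears in the listing on stdin
has_model() {
  model_names | grep -Fxq -- "$1"
}

# Example: list the models this host's Ollama has pulled (if ollama exists)
if command -v ollama >/dev/null 2>&1; then
  ollama list | model_names
fi
```

For instance, `ollama list | has_model llama3:latest || ollama pull llama3` would pull a model only when it is missing (the model name is just an illustration).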
