TensorFlow : Use Docker Image

2024/06/21


Install TensorFlow, a machine learning library.

In this example, pull the official TensorFlow Docker image with GPU support and run it in containers.


| [1] | |

| [2] | This is an example of using the TensorFlow Docker image with GPU support. |


    root@dlp:~# docker pull tensorflow/tensorflow:latest-gpu
    root@dlp:~# docker images
    REPOSITORY              TAG          IMAGE ID       CREATED        SIZE
    tensorflow/tensorflow   latest-gpu   21df1084f706   3 months ago   7.35GB

    # verify that [nvidia-smi] runs
    root@dlp:~# docker run --gpus all --rm tensorflow/tensorflow:latest-gpu nvidia-smi
    Fri Jun 21 06:33:13 2024
    +-----------------------------------------------------------------------------------------+
    | NVIDIA-SMI 550.90.07              Driver Version: 550.90.07      CUDA Version: 12.4     |
    |-----------------------------------------+------------------------+----------------------+
    | GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
    |                                         |                        |               MIG M. |
    |=========================================+========================+======================|
    |   0  NVIDIA GeForce RTX 3060        Off |   00000000:05:00.0 Off |                  N/A |
    | 30%   40C    P0             35W /  170W |       1MiB /  12288MiB |      0%      Default |
    |                                         |                        |                  N/A |
    +-----------------------------------------+------------------------+----------------------+

    +-----------------------------------------------------------------------------------------+
    | Processes:                                                                              |
    |  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
    |        ID   ID                                                               Usage      |
    |=========================================================================================|
    |  No running processes found                                                             |
    +-----------------------------------------------------------------------------------------+

    # verify that TensorFlow runs
    root@dlp:~# docker run --gpus all --rm tensorflow/tensorflow:latest-gpu \
    python -c "import tensorflow as tf; print(tf.reduce_sum(tf.random.normal([1000, 1000])))"
    2024-06-21 06:34:05.368713: I tensorflow/core/platform/cpu_feature_guard.cc:210] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.
    To enable the following instructions: AVX2 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.
    2024-06-21 06:34:07.722550: I external/local_xla/xla/stream_executor/cuda/cuda_executor.cc:998] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355
    2024-06-21 06:34:07.734896: I external/local_xla/xla/stream_executor/cuda/cuda_executor.cc:998] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355
    2024-06-21 06:34:07.735184: I external/local_xla/xla/stream_executor/cuda/cuda_executor.cc:998] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355
    2024-06-21 06:34:07.736129: I external/local_xla/xla/stream_executor/cuda/cuda_executor.cc:998] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355
    2024-06-21 06:34:07.736392: I external/local_xla/xla/stream_executor/cuda/cuda_executor.cc:998] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355
    2024-06-21 06:34:07.736620: I external/local_xla/xla/stream_executor/cuda/cuda_executor.cc:998] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355
    2024-06-21 06:34:07.936372: I external/local_xla/xla/stream_executor/cuda/cuda_executor.cc:998] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355
    2024-06-21 06:34:07.936678: I external/local_xla/xla/stream_executor/cuda/cuda_executor.cc:998] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355
    2024-06-21 06:34:07.937006: I external/local_xla/xla/stream_executor/cuda/cuda_executor.cc:998] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355
    2024-06-21 06:34:07.937295: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1928] Created device /job:localhost/replica:0/task:0/device:GPU:0 with 10394 MB memory: -> device: 0, name: NVIDIA GeForce RTX 3060, pci bus id: 0000:05:00.0, compute capability: 8.6
    tf.Tensor(2207.085, shape=(), dtype=float32)
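The GPU check above can also be scripted. The following is a minimal Python sketch, not part of the original procedure, that assembles the same kind of `docker run --gpus all --rm` command. The names `docker_run_args` and `GPU_CHECK` are hypothetical helpers introduced here for illustration, and the in-container snippet uses `tf.config.list_physical_devices("GPU")` instead of the `tf.reduce_sum` one-liner.

```python
import shlex

# Image tag taken from the example above.
IMAGE = "tensorflow/tensorflow:latest-gpu"

# Snippet to run inside the container: lists the GPUs TensorFlow can see.
GPU_CHECK = 'import tensorflow as tf; print(tf.config.list_physical_devices("GPU"))'


def docker_run_args(image, command, gpus="all"):
    """Build the argv for a throwaway (--rm) container with GPU access."""
    return ["docker", "run", "--gpus", gpus, "--rm", image, *command]


args = docker_run_args(IMAGE, ["python", "-c", GPU_CHECK])

# Print a copy-pasteable shell command. Nothing is executed here;
# pass `args` to subprocess.run() to actually start the container.
print(shlex.join(args))
```

Building the command as a list and quoting it with `shlex.join` avoids shell-escaping mistakes in the embedded Python snippet. An empty list printed by the container would suggest that TensorFlow does not see the GPU.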

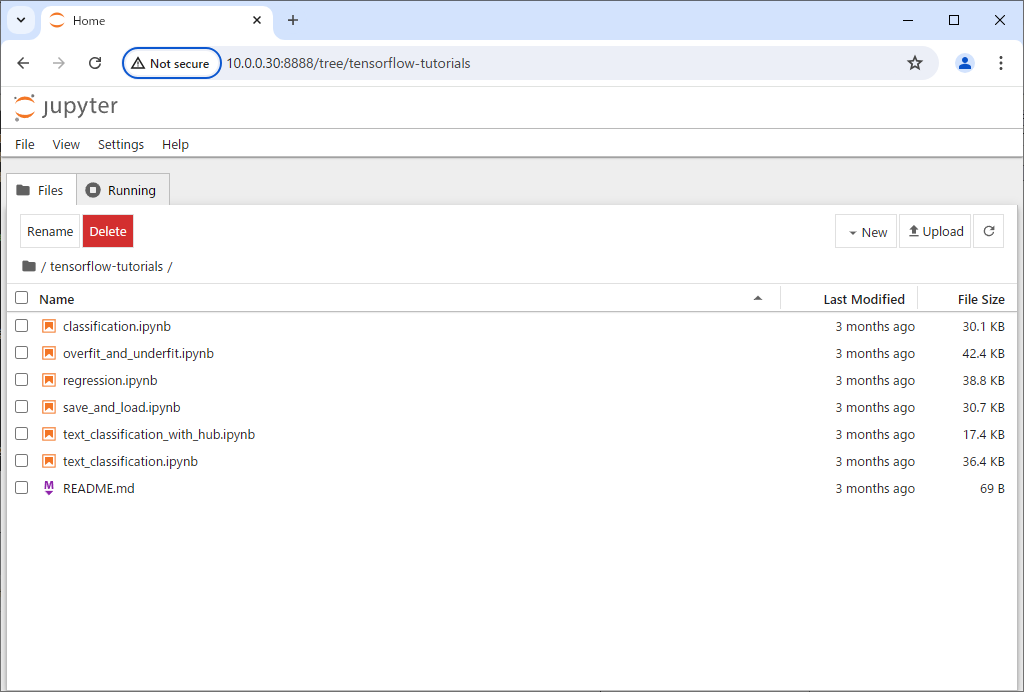

| [3] | Install the TensorFlow Docker image with Jupyter Notebook. |


    root@dlp:~# docker pull tensorflow/tensorflow:latest-jupyter
    root@dlp:~# docker images
    REPOSITORY              TAG              IMAGE ID       CREATED        SIZE
    tensorflow/tensorflow   latest-jupyter   7428e0989e67   3 months ago   2.16GB
    tensorflow/tensorflow   latest-gpu       21df1084f706   3 months ago   7.35GB

    # run the container as a daemon
    root@dlp:~# docker run -dt -p 8888:8888 tensorflow/tensorflow:latest-jupyter
    a1b34d93925d27bcd792cbf390ef3481e0d147d20e7b4d565a4462737da25c16
    root@dlp:~# docker ps
    CONTAINER ID   IMAGE                                  COMMAND                   CREATED          STATUS          PORTS                                       NAMES
    a1b34d93925d   tensorflow/tensorflow:latest-jupyter   "bash -c 'source /et…"   19 seconds ago   Up 18 seconds   0.0.0.0:8888->8888/tcp, :::8888->8888/tcp   eager_brahmagupta

    # confirm the URL
    root@dlp:~# docker exec a1b34d93925d bash -c "jupyter notebook list"
    Currently running servers:
    http://a1b34d93925d:8888/?token=5317ae138be568ea78853997a1134b2c4c2b7719a99d2e33 :: /tf

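| Access the URL above from a web browser to use Jupyter Notebook. The host part of the URL is the container ID, so replace it with the hostname or IP address of the Docker host, which publishes port 8888. |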
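The URL reported by `jupyter notebook list` uses the container ID as its hostname, which is usually not resolvable from a client machine. As a minimal sketch, assuming the container's port 8888 is published on the Docker host as in the example, the following Python rewrites the listed URL for use in a browser. The function `browser_url` and the hostname `dlp.example.com` are hypothetical and only for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def browser_url(listed_url, host, port=8888):
    """Swap the container hostname in a listed Jupyter URL for a reachable host."""
    parts = urlsplit(listed_url)
    return urlunsplit(parts._replace(netloc=f"{host}:{port}"))


# Line printed by `jupyter notebook list` in the example above.
line = "http://a1b34d93925d:8888/?token=5317ae138be568ea78853997a1134b2c4c2b7719a99d2e33 :: /tf"

# The URL comes before the " :: " separator; the notebook directory comes after it.
listed_url = line.split(" :: ")[0]

# "dlp.example.com" is a placeholder: use your Docker host's name or IP address.
print(browser_url(listed_url, "dlp.example.com"))
```

The token in the query string is preserved unchanged, so the rewritten URL logs in without a separate password prompt.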
